Austin Patel (austinpatel@berkeley.edu) (EECS Department, UC Berkeley)

NOTE: The RHOV hand-object reconstructions are from an earlier version of the work presented in the project here. Please refer to this link for the most up-to-date version of RHOV, which produces more accurate hand-object reconstructions than the preliminary version of RHOV used in this project.

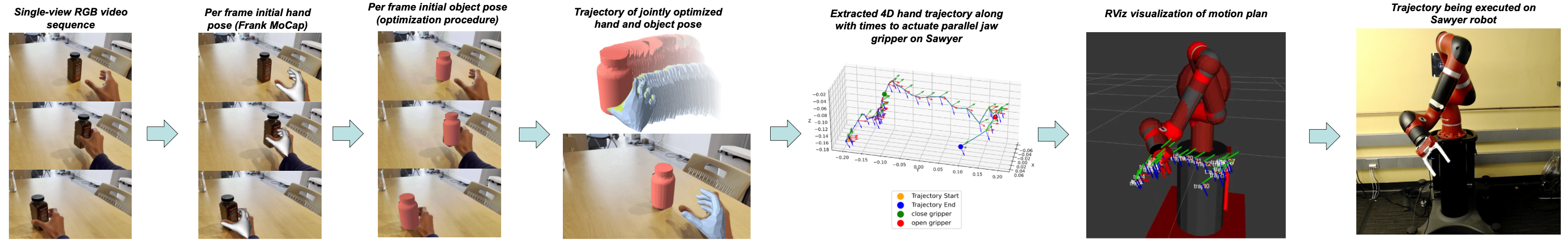

Reconstructing 3D hand and object poses from single-view 2D images is a challenging and underconstrained problem, given that no depth information is explicitly provided. Mutual hand-object occlusion further complicates the problem. In this work, we explore a joint hand-object optimization procedure called RHOV to reconstruct 3D hand and object pose trajectories from videos. We present results from this procedure on video sequences from the 100 Days of Hands dataset (YouTube videos). We also demonstrate successful execution of the extracted hand trajectories, including grasping, on a Sawyer robotic arm, which demonstrates the validity of the Track-HO4D procedure.
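The write-up does not spell out RHOV's objective, so the following is only a hypothetical sketch of what a joint hand-object objective of this general kind can look like: per-frame 2D reprojection terms for the hand and the object, a contact term that pulls fingertips onto the object, and a temporal smoothness term across frames. The function names, the weights, the pinhole camera matrix `K`, and the assumption that every 3D point has a matching 2D detection are illustrative choices, not RHOV's actual formulation.

```python
import numpy as np

# Hypothetical sketch of a joint hand-object objective; not RHOV's actual loss.

def project(points_3d, K):
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixels."""
    uvw = points_3d @ K.T               # (N, 3) homogeneous image coordinates
    return uvw[:, :2] / uvw[:, 2:3]     # divide by depth

def reprojection_loss(points_3d, keypoints_2d, K):
    """Mean squared pixel error between projected points and 2D detections."""
    err = project(points_3d, K) - keypoints_2d
    return float(np.mean(np.sum(err ** 2, axis=1)))

def contact_loss(hand_tips, obj_points):
    """Mean distance from each fingertip to its nearest object point."""
    d = np.linalg.norm(hand_tips[:, None, :] - obj_points[None, :, :], axis=-1)
    return float(np.mean(d.min(axis=1)))

def smoothness_loss(traj):
    """Mean squared frame-to-frame displacement of a (T, N, 3) trajectory."""
    return float(np.mean(np.sum(np.diff(traj, axis=0) ** 2, axis=-1)))

def joint_loss(hand_traj, obj_traj, hand_kp2d, obj_kp2d, tip_idx, K,
               w_proj=1.0, w_contact=10.0, w_smooth=100.0):
    """Total objective over T frames; all weights are illustrative.

    For simplicity the contact term is applied on every frame here; a real
    system would only apply it on frames where the hand touches the object.
    """
    total = 0.0
    for t in range(hand_traj.shape[0]):
        total += w_proj * reprojection_loss(hand_traj[t], hand_kp2d[t], K)
        total += w_proj * reprojection_loss(obj_traj[t], obj_kp2d[t], K)
        total += w_contact * contact_loss(hand_traj[t][tip_idx], obj_traj[t])
    total += w_smooth * (smoothness_loss(hand_traj) + smoothness_loss(obj_traj))
    return total
```

One motivation for minimizing a sum like this over all frames jointly, rather than fitting each frame independently, is that the contact and smoothness terms can constrain frames where occlusion leaves little 2D evidence.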

To accomplish this objective, there are two main tasks: 1) given a YouTube video of a human hand performing a manipulation task, extract the 3D hand and object poses for each frame, and 2) have a robotic arm imitate the extracted hand trajectory. For example, if a video sequence shows a human picking up and moving an object, the robotic arm should replicate the exact trajectory and perform the same manipulation task, including grasping.
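For the second task, the extracted wrist trajectory has to be expressed in the robot's coordinate frame before it can be sent to the arm. The write-up does not describe how this was done; the sketch below assumes a fixed, hand-chosen camera-to-base transform `T_base_cam`, a scale factor (the absolute scale of a single-view reconstruction can be uncertain), and a chosen robot start position `start_base`. All of these names and values are hypothetical.

```python
import numpy as np

def retarget_positions(wrist_cam, T_base_cam, start_base, scale=1.0):
    """Map (T, 3) wrist positions from the camera frame into the robot base frame.

    Hypothetical sketch: motion is taken relative to the first frame, rescaled,
    and anchored at a chosen robot start position, so the shape of the human
    trajectory is replayed rather than its absolute camera-frame location.
    """
    wrist_cam = np.asarray(wrist_cam, dtype=float)
    homog = np.hstack([wrist_cam, np.ones((len(wrist_cam), 1))])  # (T, 4)
    in_base = (homog @ T_base_cam.T)[:, :3]                        # rotate + translate
    return np.asarray(start_base, dtype=float) + scale * (in_base - in_base[0])
```

Because only relative motion is used, the translation part of `T_base_cam` cancels out; its rotation still determines which direction the trajectory moves in the robot frame.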

This project is interesting because past work has only shown extraction of hand and object pose using a complicated lab setup involving one or more depth cameras. These setups are complex, and the data they provide is limited to what has been recorded in that particular setup. In contrast, being able to extract both hand and object pose in 3D from only a single-view RGB video sequence would enable us to extract useful information from sources like YouTube videos. Many YouTube videos contain demonstrations of how humans interact with objects. Hand and object poses extracted from large amounts of internet video could be useful in reinforcement learning algorithms that help robots learn manipulation tasks.

The above diagram shows the full pipeline for extracting hand and object pose and executing trajectories on a Sawyer robotic arm.

This project has two key components: RHOV, which extracts hand and object poses, and the code that executes the extracted trajectories on the Sawyer robot arm.

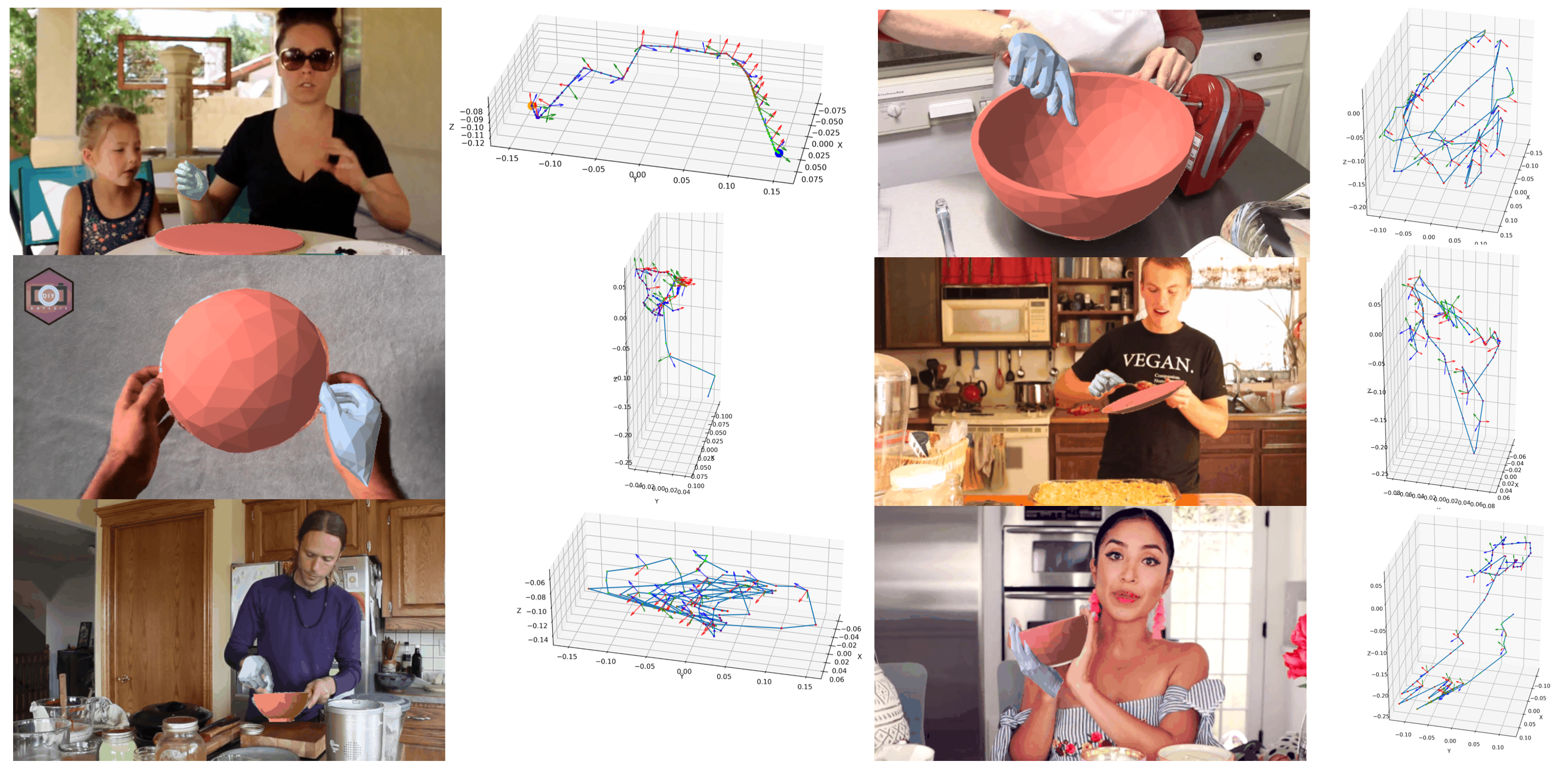

On a set of 15 YouTube videos, the RHOV procedure successfully extracted 3D hand and object poses for every frame of each video sequence. Here is a subset of those results:

Trajectory tracking was demonstrated on six YouTube videos with the end effector always pointing downward. Having the end effector match the extracted hand orientation was difficult due to limitations in the joint-space controller and sudden movements in the trajectory. Trajectory tracking also worked on a video of a pick-and-place task I recorded with my phone. For this video, I was able to demonstrate the Sawyer arm following the orientation of the human hand extracted from the video, and I was also able to get the gripper to open and close when the hand opened and closed in the video.
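The downward-pointing execution mode and the gripper behavior described above can be sketched as turning each frame into a waypoint of (position, fixed downward orientation, gripper state). The write-up does not say how hand opening and closing was detected; this sketch assumes the thumb-tip to index-tip distance is used as the grasp signal, with two hypothetical thresholds (hysteresis) so that jitter around a single threshold does not make the gripper chatter. The quaternion `DOWN_XYZW` (a 180° rotation about the base x-axis) is one way to point a tool's z-axis straight down; the correct value for a specific robot depends on its gripper frame convention.

```python
import numpy as np

# 180 degree rotation about the base x-axis in (x, y, z, w) order: maps the tool
# z-axis onto -z. Assumed convention; check it against the robot's gripper frame.
DOWN_XYZW = np.array([1.0, 0.0, 0.0, 0.0])

def gripper_commands(apertures, close_below=0.04, open_above=0.06, start_open=True):
    """Return one open (True) / closed (False) flag per frame using hysteresis.

    Thresholds are hand apertures in meters and purely illustrative.
    """
    is_open = start_open
    commands = []
    for a in apertures:
        if is_open and a < close_below:
            is_open = False          # fingers came together: close the gripper
        elif not is_open and a > open_above:
            is_open = True           # fingers spread apart: open the gripper
        commands.append(is_open)
    return commands

def build_waypoints(positions, thumb_tips, index_tips, **thresholds):
    """Pair each (3,) position with the downward orientation and a gripper flag."""
    apertures = np.linalg.norm(np.asarray(thumb_tips) - np.asarray(index_tips), axis=1)
    flags = gripper_commands(apertures, **thresholds)
    return [(np.asarray(p, dtype=float), DOWN_XYZW, g) for p, g in zip(positions, flags)]
```

With the orientation fixed, only the position and gripper state come from the video, which sidesteps the sudden orientation changes that the controller had trouble following.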

In summary, the Track-HO4D procedure successfully demonstrated the ability to take a single-view RGB video sequence of a manipulation task and execute the same trajectory on a Sawyer robot arm. Seven trajectories (six from YouTube videos and one from the phone recording) were successfully executed on the Sawyer robot. The robot was also able to open and close its gripper to match the human grasping in the input video.

RHOV struggled when the object was substantially occluded by the hand or when the object had symmetries about one or more axes. Trajectory execution struggled with sudden movements in the trajectories, especially quick changes in hand orientation.
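One common way to soften sudden movements and quick orientation changes is to filter the trajectory before sending it to the controller. The write-up does not describe doing this, so the following is only a sketch of that general idea: an exponential moving average on positions, a cap on per-waypoint displacement, and a spherical linear interpolation (slerp) step that limits how far the orientation can rotate between consecutive waypoints. The function names and the default limits (`alpha`, `max_step`, `max_angle`) are hypothetical.

```python
import numpy as np

def ema_smooth(traj, alpha=0.3):
    """Exponential moving average over a (T, D) trajectory; smaller alpha = smoother."""
    traj = np.asarray(traj, dtype=float)
    out = np.empty_like(traj)
    out[0] = traj[0]
    for t in range(1, len(traj)):
        out[t] = alpha * traj[t] + (1 - alpha) * out[t - 1]
    return out

def clamp_steps(positions, max_step=0.02):
    """Limit the displacement (meters) between consecutive waypoints."""
    positions = np.asarray(positions, dtype=float)
    out = [positions[0]]
    for p in positions[1:]:
        delta = p - out[-1]
        dist = np.linalg.norm(delta)
        if dist > max_step:
            delta = delta * (max_step / dist)   # move toward p, but only max_step
        out.append(out[-1] + delta)
    return np.array(out)

def limit_rotation_step(q_prev, q_target, max_angle=np.deg2rad(10)):
    """Slerp from q_prev toward q_target (unit quaternions) by at most max_angle radians."""
    q0 = q_prev / np.linalg.norm(q_prev)
    q1 = q_target / np.linalg.norm(q_target)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:                      # q and -q are the same rotation: take the short path
        q1, dot = -q1, -dot
    half = np.arccos(min(dot, 1.0))    # half of the rotation angle between the two
    if 2 * half <= max_angle:
        return q1
    t = max_angle / (2 * half)         # fraction of the arc to travel this step
    q = (np.sin((1 - t) * half) * q0 + np.sin(t * half) * q1) / np.sin(half)
    return q / np.linalg.norm(q)
```

Both caps trade tracking fidelity for smoothness: the robot lags behind fast hand motions instead of jerking to follow them.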

One avenue for future improvement is to use the four-fingered Allegro hand, together with a retargeting procedure, to better replicate the human hand. Future work could also include creating a labeled dataset of YouTube videos with hand and object trajectories extracted using RHOV. Trajectories from this dataset could be used to construct reward functions for RL algorithms that help robots learn manipulation tasks. One could imagine using a large-scale dataset of trajectories extracted from YouTube videos to learn a wide variety of policies for robotic manipulation tasks.